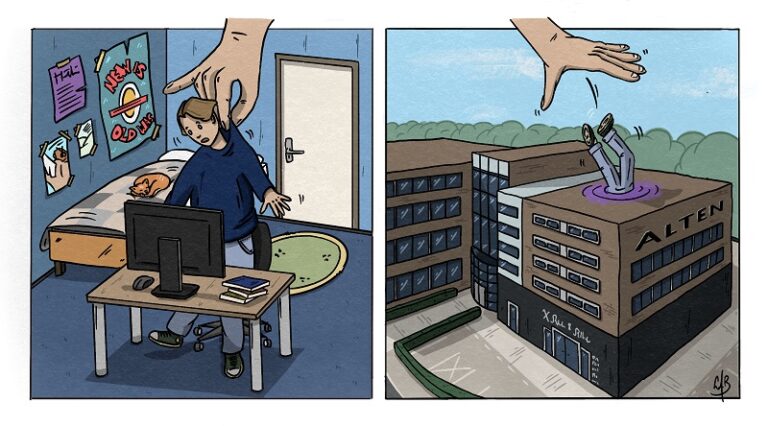

This blog is the first in a series that highlights exciting developments within the world of data platforms. On behalf of ALTEN, I am happy to share these new technologies, which we are already applying successfully in our projects.

A new buzzword has entered the already overcrowded vocabulary of the data profession: the data lakehouse. Even less data-savvy professionals have heard of data warehouses and data lakes, but now a new means of centrally storing data for analytical purposes has emerged. The potential of data lakehouses has even been recognized by Bill Inmon, the father of traditional data warehousing, who recently wrote a book about the value of a data lakehouse within the modern data platform. But to understand the data lakehouse concept, we must first go through a brief history of analytical storage.

The origins of analytical storage

Ever since the start of the digital revolution in the second half of the 20th century, data has been processed in exponentially increasing amounts, especially once computers were found to be useful for more than merely performing complex calculations. In the 1960s and 1970s, computers started to be used for handling transactional workloads for organizations, automating the record-keeping that had traditionally been carried out manually. As compute and storage technologies advanced, the volumes of data grew along with them. However, one big problem emerged when organizations tried to analyze the data they had gathered along the way: querying a database for analysis takes costly resources away from its transactional and operational workloads, creating bottlenecks in both processes. Enter the data warehouse: a single source of truth where an organization’s data is centralized in separate databases that are optimized for analytical use.

Old-school data warehouses traditionally take transactional databases and spreadsheets as their input. Data is ingested through an Extract, Transform, and Load (ETL) process, in which the resource-intensive transformations are performed in a staging area before the data is loaded into the data warehouse. The traditional ETL process is batch-oriented and therefore ingests large chunks of data at once, following a predetermined schedule. Storage within data warehouses follows the relational model, which has been the de facto standard since the 1980s. The relational model imposes structure on the storage of data, which brought about high performance and ease of use while consuming limited resources. Nonetheless, this structured storage also introduces rigidity. Schemas need to be defined beforehand, and implementing changes afterwards can be cumbersome, especially with big datasets.
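The batch-oriented ETL flow described above can be sketched in a few lines. This is a minimal illustration rather than a production pipeline: an in-memory SQLite database stands in for the data warehouse, and the source rows, the table name `fact_orders`, and the function names are all hypothetical.

```python
import sqlite3

# Hypothetical rows extracted from a transactional source system.
SOURCE_ROWS = [
    {"order_id": 1, "customer": " alice ", "amount_cents": 1250},
    {"order_id": 2, "customer": "BOB", "amount_cents": 990},
    {"order_id": 3, "customer": "alice", "amount_cents": 400},
]

def extract():
    """Extract: read one batch from the source (here, an in-memory list)."""
    return list(SOURCE_ROWS)

def transform(rows):
    """Transform: clean the data in a staging step, outside the warehouse."""
    return [
        (r["order_id"], r["customer"].strip().lower(), r["amount_cents"])
        for r in rows
    ]

def load(conn, staged):
    """Load: write staged rows into a table whose schema is fixed up front."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_orders ("
        "order_id INTEGER PRIMARY KEY, "
        "customer TEXT NOT NULL, "
        "amount_cents INTEGER NOT NULL)"
    )
    conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", staged)
    conn.commit()

def run_batch(conn):
    """One scheduled ETL run: extract, then transform, then load."""
    load(conn, transform(extract()))

warehouse = sqlite3.connect(":memory:")
run_batch(warehouse)

# Analytical query against the structured, cleaned data.
totals = warehouse.execute(
    "SELECT customer, SUM(amount_cents) FROM fact_orders "
    "GROUP BY customer ORDER BY customer"
).fetchall()
print(totals)
```

Note how the schema is declared before any data arrives: adding a column later means altering the table and revisiting the transform step, which is the rigidity mentioned above.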

From data lakes to data swamps

In the late 2000s and early 2010s, the volumes of analytical data that organizations wished to process exploded beyond the capacity of many traditional on-premises data warehouses. The era of big data arrived, and with it came data in formats other than the structured format that data warehouses expect. To address these challenges, the data lake was brought into existence. Data lakes can store data in any format, from structured to semi-structured to unstructured, while also supporting real-time data ingestion. Moreover, the compute resources necessary for queries were decoupled from storage resources for the first time. This provided a stark contrast with on-premises data warehouses in terms of scalability. Combined with the emergence of cloud technologies, where the ceiling for scalability is extraordinarily high and the costs of storage are low, the data lake established itself as the main choice for storing analytical big data.

Organizations that needed to handle big data workloads swiftly transitioned from data warehouses towards the flexible and scalable (cloud) data lakes. Unfortunately, efficiently implementing and managing a data lake is a herculean task. Without proper design, the unstructured nature of the stored data frequently leads to lower performance than structured storage. In addition, bottlenecks can form because of limited capacity for parallel querying. Data lakes are better optimized for writes than for reads, since they were meant to enable organizations to continuously dump all of their data in one place without enforcing structure. Because more data with less structure requires well-defined governance, many data lakes have become data swamps in the absence of proper management. These data swamps make data discovery almost impossible and get in the way of successfully extracting analytical insights.

Combining the best of both worlds

In the past few years, an attempt has been made to reconcile the data warehouse and the data lake concepts by making use of modern technologies. Ideally, you would want the efficiency of the data warehouse accompanied by the flexibility of the data lake, combined into a scalable solution. Out of this ideal, the data lakehouse concept was born and is now realized on the shoulders of recent technological advances. Its power is captured in a number of key characteristics.

First of all, data in lakehouses is stored in an unstructured format, but can be efficiently queried as if it were structured (by means of ‘schema-on-read’). Even videos and images can be processed in this manner. This is achieved by storing metadata on top of the files in the data lakehouse. This metadata mainly records past insertions and updates to these files, along with some form of schema, which allows processing engines to treat the files as ‘virtual’ tables. As a result, files can be queried with SQL, just like regular tables in a data warehouse.
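To make the idea of metadata on top of files more concrete, here is a toy sketch in plain Python. It is loosely inspired by the log-plus-schema approach described above and does not reproduce the actual on-disk format of any real lakehouse implementation. The `_log` directory, the `schema`/`add`/`remove` actions, and the file names are all assumptions made for illustration.

```python
import json
import tempfile
from pathlib import Path

CASTS = {"int": int, "float": float, "str": str}

def commit(table_dir, version, actions):
    """Write one numbered log entry describing changes to the table."""
    log_dir = table_dir / "_log"
    log_dir.mkdir(exist_ok=True)
    (log_dir / f"{version:08d}.json").write_text(json.dumps(actions))

def read_table(table_dir):
    """Replay the log to find the schema and live files, then read rows."""
    schema, live = {}, set()
    for entry in sorted((table_dir / "_log").glob("*.json")):
        for action in json.loads(entry.read_text()):
            if action["op"] == "schema":
                schema = action["columns"]
            elif action["op"] == "add":
                live.add(action["file"])
            elif action["op"] == "remove":
                live.discard(action["file"])
    rows = []
    for name in sorted(live):
        for line in (table_dir / name).read_text().splitlines():
            raw = json.loads(line)
            # Schema-on-read: types are applied now, not at write time.
            rows.append({col: CASTS[t](raw[col]) for col, t in schema.items()})
    return rows

table = Path(tempfile.mkdtemp())
# Raw files land in storage as untyped text.
(table / "part-0.jsonl").write_text('{"id": "1", "temp": "20.5"}\n')
(table / "part-1.jsonl").write_text('{"id": "2", "temp": "21.0"}\n')
(table / "part-2.jsonl").write_text('{"id": "2", "temp": "19.0"}\n')

commit(table, 0, [
    {"op": "schema", "columns": {"id": "int", "temp": "float"}},
    {"op": "add", "file": "part-0.jsonl"},
    {"op": "add", "file": "part-1.jsonl"},
])
# An "update" writes a new file and swaps it in through the log.
commit(table, 1, [
    {"op": "remove", "file": "part-1.jsonl"},
    {"op": "add", "file": "part-2.jsonl"},
])

rows = read_table(table)
print(rows)
```

The data files themselves are never modified in place. The log alone decides which files belong to the current version of the ‘virtual’ table, and the schema is only applied when the table is read.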

Secondly, a data lakehouse separates its storage and compute resources, which enables users to store their data in cheap, scalable (cloud) data lake storage and essentially pay only for the compute power needed for processing. This was already the case in data lakes, but, as discussed earlier, data lakes bring other problems to the table in return. Modern data warehouses also decouple storage and compute resources, but have only done so since the rise of the cloud, and even so, the costs of data warehouse storage are still much higher than those of data lake storage. Therefore, data lakehouses are more cost-efficient than modern data warehouses.

Furthermore, data lakehouses allow for real-time data ingestion and analysis. Previously, many data platforms had to separate real-time data flows from batch data flows. In these so-called lambda architectures, real-time data and batch data are processed separately because data warehouses traditionally don’t support real-time ingestion and analysis. The main processing engines for data lakehouses, on the other hand, already support this, which enables both real-time and batch data flows to be ingested and queried in the same way.
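As a rough illustration of what ingesting and querying ‘in the same way’ means, the toy sketch below sends a bulk load and a series of micro-batches through one and the same write call into one table. The `Table` class, the two data sources, and their contents are hypothetical; real processing engines add scheduling, checkpointing, and fault tolerance on top of this idea.

```python
from typing import Iterable, Iterator

class Table:
    """A toy append-only table shared by batch and streaming writers."""

    def __init__(self) -> None:
        self.rows: list[dict] = []

    def append(self, batch: Iterable[dict]) -> None:
        """Single write path, regardless of where the rows come from."""
        self.rows.extend(batch)

    def total(self, column: str) -> int:
        """Single read path: sums a column over everything ingested so far."""
        return sum(row[column] for row in self.rows)

def nightly_batch() -> list[dict]:
    """Hypothetical bulk load: many rows arriving at once."""
    return [{"source": "batch", "clicks": n} for n in (10, 20, 30)]

def click_stream() -> Iterator[list[dict]]:
    """Hypothetical stream: small micro-batches arriving over time."""
    for n in (1, 2):
        yield [{"source": "stream", "clicks": n}]

events = Table()
events.append(nightly_batch())        # batch path
for micro_batch in click_stream():    # streaming path, same write call
    events.append(micro_batch)

total_clicks = events.total("clicks")
print(total_clicks)
```

In a lambda architecture, the two loops above would write to two different systems, and a query would have to merge their results afterwards.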

Lastly, data lakehouses feature ACID transactions, meaning transactions that guarantee Atomicity, Consistency, Isolation, and Durability. Very simply put, this means that changes and insertions of data don’t get in each other’s way, so that data doesn’t get corrupted. Until now, these guarantees were only available in databases and data warehouses, because of their structured storage. Because data lakehouses perform their create, read, update, and delete operations based not only on data files but also on the added metadata, they impose sufficient structure to enable ACID transactions in their processing, even though their storage is unstructured.
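One common way to obtain such guarantees on top of plain files is optimistic concurrency: every writer tries to claim the next version number in a metadata log, and only one writer can win each number. The sketch below illustrates that idea with Python's exclusive file creation. It is a simplified assumption-laden example, not the actual commit protocol of any lakehouse implementation; the log layout and function names are hypothetical, and a real system would also check whether the winning commit conflicts with the loser's changes and would rely on an atomic primitive offered by its storage layer.

```python
import json
import tempfile
from pathlib import Path

def latest_version(log_dir):
    """Return the highest committed version, or -1 for an empty log."""
    versions = [int(p.stem) for p in log_dir.glob("*.json")]
    return max(versions, default=-1)

def try_commit(log_dir, version, actions):
    """Claim a version number; fail if another writer got there first."""
    path = log_dir / f"{version:08d}.json"
    try:
        # Mode "x" creates the file only if it does not exist yet.
        with open(path, "x") as f:
            json.dump(actions, f)
        return True
    except FileExistsError:
        return False

def commit_with_retry(log_dir, actions, max_attempts=5):
    """Re-read the log and retry until a free version number is claimed."""
    for _ in range(max_attempts):
        version = latest_version(log_dir) + 1
        if try_commit(log_dir, version, actions):
            return version
    raise RuntimeError("too many conflicting writers")

log_dir = Path(tempfile.mkdtemp())

# Two writers both believe the next free version is 0.
writer_a_ok = try_commit(log_dir, 0, [{"op": "add", "file": "a.parquet"}])
writer_b_ok = try_commit(log_dir, 0, [{"op": "add", "file": "b.parquet"}])

# The losing writer re-reads the log and retries on top of the winner.
writer_b_version = commit_with_retry(
    log_dir, [{"op": "add", "file": "b.parquet"}]
)
print(writer_a_ok, writer_b_ok, writer_b_version)
```

Readers only ever see versions that were committed in full, and two writers can never both own the same version, which is what keeps concurrent changes from corrupting the table.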

Current implementations

The main implementations of the data lakehouse concept right now are Delta Lake, Apache Hudi, and Apache Iceberg. Hudi and Iceberg have recently been made open source by their creators at Uber and Netflix respectively, but neither is as fleshed out as its counterpart Delta Lake. Delta Lake is, at the time of writing, the most advanced of the three and is available both as open source and as a hosted service (with extra functionality) from Databricks, the organization that created Delta Lake. Databricks releases new Delta Lake features at least every month, and in the last year in particular we have seen multiple large additions. A recent example is Databricks SQL, which includes an SSMS-like interface for executing highly performant queries on your data lakehouse, as well as out-of-the-box connectors for most major data visualization tools, such as Power BI and Tableau. These features weren’t possible on regular data lakes, and they provide a familiar UI and UX to analysts who are used to writing SQL queries on data warehouses. This enables organizations that wish to process bigger data volumes, process more varied sources of data, and/or process data in real time to transition smoothly from the rigidity of a data warehouse to the flexibility of the data lakehouse.

Teething problems

Naturally, every new technology also brings its own drawbacks. One of the main arguments currently held against data lakehouses is that they are not yet as refined as their counterparts. Up until now, this has held back many organizations from implementing a data lakehouse. Fortunately, more and more organizations have adopted the lakehouse concept and found that it has already matured sufficiently to deliver on its promises in production scenarios. Another drawback, which we at ALTEN have already experienced ourselves, is that the current data lakehouse implementations aren’t optimized for small datasets yet, because they rely on big data processing frameworks such as Apache Spark. Fortunately, Databricks is working on a new processing engine for Delta Lake (Photon), which should bring better performance for every type of workload. As of now, however, the Photon engine is still in beta, so using it in production scenarios is discouraged for the time being. Until Photon or any other upcoming alternative is generally available, you won’t get as much value for your money with small data workloads as with big data workloads, unless you creatively utilize the resources in your data lakehouse.

Conclusion

The data lakehouse concept is very promising and an architectural game changer for data platforms. Combining the high performance of data warehouses with the scalability, cost-efficiency, and flexibility of data lakes makes it possible to get the best of both worlds in terms of analytical data storage and processing within modern data platforms. Of course, the data lakehouse concept has experienced some teething problems, but, fortunately, it has matured at an astonishing pace, which it looks set to maintain in the coming years. At ALTEN, we have already successfully deployed data platforms featuring data lakehouses for our clients and will certainly continue to do so in the future. We’re eager to see where this concept is going, so we’re definitely keeping an eye out for new developments within the field, such as improved performance on small datasets and enhanced built-in capabilities for data lineage and data quality assurance.

The next parts in this series will feature modular microservices architectures for data platforms and how to harmonize data lakehouses and microservices architectures into the development of not just a modern data platform, but a futuristic data platform.

Axel van ‘t Westeinde

Consultant
