Algorithms… they live among us! A supermarket predicting which customers are pregnant. Police identifying the streets where crimes will take place that evening. A judge determining which people in custody pose a flight risk, based solely on their personal data. All of them outcomes of an algorithm. All of them in use at this moment.

Artificial intelligence is progressing rapidly and evolving by the day. It moves so much faster than people can keep up with that many fear we will reach the so-called singularity within two or three decades. Public figures like Stephen Hawking and Elon Musk have voiced their concerns about this.

There is, however, another risk of AI. One that we’re already smack in the middle of.

AI vs NS

What is this danger that artificial intelligence currently encourages? It’s called natural stupidity. We’re people and as people our brains are wired in such a way that our thinking is full of pitfalls. Pitfalls that collectively we call cognitive biases. Or to put it more bluntly, natural stupidity.

All AI systems currently in use fall under the header of weak AI. This means that the algorithms focus on solving a certain type of problem, often a very specific one. Many people, however, treat these systems as if they had human intelligence, only without human subjectivity. But is this trust in the infallibility of machines warranted? Are algorithms as objective as many think they are?

The short-term danger isn’t that AI will take over the world, but that we will drop the world expecting that AI will catch it. So it isn’t only artificial intelligence we have to look out for, but also natural stupidity.

In this article I’ll discuss some of the pitfalls and where they can lead. After that, let’s look at how we can handle this more consciously and wisely.

“It said I had to do it…”

Luckily he managed to climb out of his car in time, grab his suitcase, and call the emergency line. But the Ford Focus this 32-year-old man had rented had its chassis stuck between the tracks. The oncoming train couldn’t brake in time and dragged the car along for tens of meters until it turned into a fireball. The five hundred passengers on the New York train were stranded for two hours, ten other trains were delayed, and about a hundred meters of track were damaged.

The reason this visitor from Silicon Valley drove his car between the train tracks? His GPS instructed him to go right, so he did. His trust in his car navigation was so great that it overrode what was right before his eyes.

Or take the journey undertaken by Sabine Moreau. She set off on a Saturday morning from her Belgian village southwest of Charleroi heading towards Brussels airport, a trip that normally takes about an hour. Her car navigation pointed her towards a different route than usual, but no need to question that, right? Not even when she passed the German road signs for Aachen, Cologne, and Frankfurt, had to fill up twice, crossed the Alps, and stopped for a nap because the journey had tired her out. Only when she arrived in Zagreb, Croatia, did she realise something must’ve gone wrong, and she headed back the way she came.

Extreme cases where common sense seems to have gone completely out of the window. More and more, we trust that whatever an algorithm determines for us will be correct. We want to trust in that, because hey, that’s why we have computers in the first place! I’ll admit, if my calculator tells me the square root of 1,999 rounded off to three decimals is 44.710, I’m rarely going to recalculate that by hand.

Continuously relying on the computing power and memory of machines instead of your own has other consequences as well: it diminishes your abilities. Try and find someone who knows the numbers in his phone by heart. What you don’t practice, you no longer master. Funny, unimportant, or perhaps a bit annoying in some cases, but fatal in others. As all occupants of flight 3407 experienced when the pilot had to take over the controls from the autopilot.

“Rules are rules”

John Gass received a letter stating that his driving license had been revoked, effective immediately. Panic struck this 41-year-old Massachusetts native. A driver without any incidents, not a ticket in years. Why had this happened? After repeated calls he received the answer: a face recognition algorithm used for antiterrorism had flagged his photo. It had determined that his face looked so much like that of another driver that identity fraud was deemed likely. His license had been revoked automatically. Only after ten days did he get it back, and only after he could prove that he was who he said he was.

The current world is one in which algorithms become increasingly capable and are being used more and more to do what we as people find difficult or perhaps are even unable to do. But in doing so, we create a new authority figure to which it is easy to delegate responsibility.

The problem is not so much that algorithms can make mistakes. People make mistakes too. Even more so, perhaps. No, the problem is that using algorithms creates the impression that the ‘error prone,’ ‘human’ element has been taken out and that analyses are now ‘objective.’

But how do algorithms come about? In the end their source is a set of rules thought up by people. And consciously or unconsciously our assumptions and biases sneak in. But what about self-learning algorithms, I hear you think. Well, when you come down to it, they are trained with data sets. And in selecting such data sets (you can see where I’m going) those assumptions and biases come into play.

Algorithms in this sense are extensions of people. The flaws in human thinking are not brushed away just because the steps are now performed by machines. Moreover, they can even be magnified.

“Create your own truth”

What do Ronaldo, Griezmann, Neymar, Suárez, and Depay have in common, apart from being part of the top of international football?

All were born in the first three months of the year. Let’s investigate the top 100 and take a closer look at the birth month. What do we notice? Grouping all birthdays into quarters we get the following chart.

We see that athletic performance and date of birth are related. A disproportionately large share of the top players was born in the first months of the year and a disproportionately small share in the last months. This relationship can be found not only in this top 100, but in all top divisions. And not only in football, but in a whole lot of sports. It even has a name: the relative age effect.

So, do we have here the beginning of a predictive algorithm? Can we determine at birth which kids will excel in sports? That’ll save a lot of work.

Well, let’s not jump the gun. Because now it becomes easy to confuse cause and effect. The reason is not the month itself, but the grouping and selection based on it. For their first training, young players are organised by calendar year. But between a child born in January 2013 and one born in December 2013 there’s a gap of almost a year. A huge difference at that age, one that can express itself in both strength and mental abilities. So, if after the first year the best players are selected for the team of stars (where they’ll get more acknowledgement and opportunities), who do you think will make up the bulk of that team? If this happens year on year, those effects become reality, and those players are now indeed the best players.
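To make the selection step concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration rather than real football data: the function name `simulate_selection`, the cohort size, the idea that talent follows the same bell curve in every birth month, and the size of the head start per month of extra age.

```python
import random


def simulate_selection(children_per_month=1000, top_fraction=0.1,
                       monthly_edge=0.05, seed=42):
    """Select the best-scoring children in one calendar-year cohort and
    count the selected children per birth quarter."""
    rng = random.Random(seed)
    cohort = []
    for month in range(1, 13):
        # A January child is 11 months older than a December child.
        months_older = 12 - month
        for _ in range(children_per_month):
            # Assumption: talent has the same distribution in every month.
            talent = rng.gauss(0.0, 1.0)
            # Assumption: each extra month of age adds a small, fixed edge.
            score = talent + monthly_edge * months_older
            cohort.append((score, month))

    # The coach only sees the score, not the underlying talent.
    cohort.sort(reverse=True)
    selected = cohort[:round(len(cohort) * top_fraction)]

    per_quarter = [0, 0, 0, 0]
    for _, month in selected:
        per_quarter[(month - 1) // 3] += 1
    return per_quarter


quarters = simulate_selection()
for number, count in enumerate(quarters, start=1):
    print(f"Q{number}: {count} selected")
```

Under these assumptions the first quarter ends up with clearly more selected children than the last one, even though talent was drawn identically for every month.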

This makes it a self-fulfilling prophecy. A process that, because of a positive feedback loop, is able to create its own truth. This is something that can happen with artificial intelligence as well. If the output of an algorithm is fed back as input to refine a prediction, a small difference, whether introduced intentionally or not, is amplified and becomes a big difference.

Do we want algorithms to repeat the past, with all its mistakes, or do we want something else? Because skewed data sets, prejudices of developers, and logical errors lead to algorithms that amplify sexist and racist prejudices.
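The feedback loop can be sketched in a few lines. The update rule below is a hypothetical stand-in, not taken from any real system: opportunities are handed out in proportion to each group’s current share raised to a power `gain`, and any `gain` above 1 favours whoever is already ahead. The names `amplify`, `share_a`, and `gain` are illustrative assumptions.

```python
def amplify(share_a, rounds, gain=1.5):
    """Track group A's share when each round's output is the next input."""
    history = [share_a]
    for _ in range(rounds):
        # Hypothetical rule: weight each group by share ** gain,
        # then renormalise so the two shares add up to 1 again.
        weight_a = share_a ** gain
        weight_b = (1.0 - share_a) ** gain
        share_a = weight_a / (weight_a + weight_b)
        history.append(share_a)
    return history


history = amplify(share_a=0.51, rounds=10)
for round_number, share in enumerate(history):
    print(f"round {round_number:2d}: group A share = {share:.3f}")
```

With these assumed numbers a head start of 51 against 49 grows to a share of roughly 0.9 after ten rounds, while a perfectly even split of 0.5 stays where it is. Nothing in the loop judges quality; it only feeds its own output back in.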

Man vs Machine

Reading the previous paragraphs you could be forgiven for thinking I’m not an advocate of algorithms, but you’d be wrong. Solving a problem step by step and then automating the solution helps us and makes our lives easier. It’s fascinating to see connections exposed that at first seemed invisible. It’s intriguing to experience an AI ‘doing this by itself,’ in other words, to no longer oversee the steps by which a process is executed. But I certainly am critical of thoughtless use of and blind trust in algorithms.

How can we use the advantages of artificial intelligence without stepping into too many of its pitfalls? It begins with understanding. Understanding what algorithms are good at and what they are not. Understanding what people are good at and what they are not.

Algorithms excel at consistently ploughing through large quantities of data, and at sorting, prioritising, and classifying these. Subsequently they, or other algorithms, can filter or associate the data. In other words, finding a needle in a haystack. Like in the figure below.

Where most of us have to look a second or third time to see what is different here (and some of us still won’t see it), an algorithm doesn’t even need to blink its proverbial eyes.

What’s people’s strong suit? Take a look at the image below.

In this well-known optical illusion people see a duck… or a rabbit… or both. Once it clicks, you can see both and accept it is both. People are good at understanding context and at handling change within a context. In other words, giving meaning to a pattern. This in turn is something AIs struggle with.

The best of both worlds

So, how to continue? Renouncing algorithms and returning to past times is not a realistic option. Without algorithms there are no search engines, so good luck finding that webpage. What am I saying? Without algorithms there is no web and there are no computers. So much for your Netflix evening.

But hanging back and assuming the AI will fix it is a reckless option, as we saw in the previous examples. The only way forward is to strike the right balance between artificial and natural intelligence.

What form could such a collaboration take? By using the power of AI where you fall short, and by accepting the limitations of AI. And then by taking responsibility and supplementing the AI with your human intelligence.

A good example is medical diagnostics. Algorithms are good at finding patterns. Good at trawling through thousands of images and picking out what deviates from the norm. As a person you’re likely to miss just that one dot amongst thousands of others, and that can prove to be fatal. So, what is the physician good at? At giving meaning to that deviation. When she’s alerted to a detail of an image, she can quickly judge whether it is something for which action is required or not.

Transparency for a successful collaboration

For a successful collaboration between natural and artificial intelligence it is important that algorithms are developed and presented in a certain way. A way that is best suited to joining forces and ruling out misunderstandings.

In a word, that’s called transparency. Transparency about what goes in and transparency about what comes out.

Transparency about the input is important so the user can assess the value of an algorithm. Suppose I use an algorithm to calculate my chances of a successful medical procedure. If I know that the relevant inputs for this algorithm are the letters of my name and my zodiac sign, I’ll attach a different value to it than I would’ve otherwise. Vagueness about the inner workings of an algorithm, and especially of a bad algorithm, can lead to a lot of problems, as an infamous case about medical assistance in Idaho showed.

Transparency about the output is important to stop a user blindly staring at only one outcome. Without further details an outcome can have an air of certainty that is unwarranted. If an algorithm determines with a certainty of 50.1% that a cluster of cells is malignant, would you like your doctor to know this percentage before proceeding to operate?
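One way to picture output transparency is a result that carries its certainty with it instead of a bare label. The sketch below is purely illustrative: the names `Finding` and `report`, the 0.5 cut-off, and the `margin` that decides when a human should take a second look are all assumptions, not part of any real diagnostic system.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    label: str           # what the algorithm concluded
    certainty: float     # how sure it is of that label, from 0.5 to 1.0
    needs_review: bool   # True when the call is too close to trust blindly


def report(p_malignant, margin=0.2):
    """Turn a raw probability into a label plus the context around it."""
    if not 0.0 <= p_malignant <= 1.0:
        raise ValueError("p_malignant must lie between 0 and 1")
    label = "malignant" if p_malignant >= 0.5 else "benign"
    certainty = p_malignant if label == "malignant" else 1.0 - p_malignant
    # Assumption: anything within `margin` of the cut-off goes to a person.
    needs_review = abs(p_malignant - 0.5) < margin
    return Finding(label, certainty, needs_review)


for probability in (0.501, 0.98):
    finding = report(probability)
    print(f"{finding.label} ({finding.certainty:.1%}), "
          f"review needed: {finding.needs_review}")
```

Both example inputs come back labelled malignant, but only the 50.1% case is flagged for review. The label alone would hide exactly the detail the doctor needs.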

It’s all about people

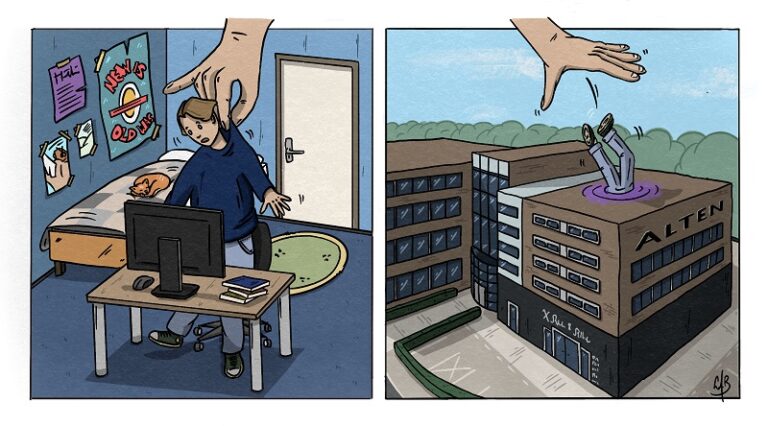

Perhaps the tipping point has passed us already and decoupling intelligence from consciousness will lead to a dramatic transformation of humankind, as Yuval Noah Harari argues in his book Homo Deus. But let’s deal with this development wisely and direct its course as much as we can.

It starts with us, right now, at this moment. With us as professionals in the digital world who develop algorithms and apply them in technical solutions. With us all as members of a society in which algorithms play an ever-increasing role and shape our lives. Our lives, because it’s all about people.

Inspiration and further reading

Brian Christian & Tom Griffiths, Algorithms to Live By: The Computer Science of Human Decisions

Hannah Fry, Hello World: How to Be Human in the Age of the Machine

Yuval Noah Harari, Homo Deus: A Brief History of Tomorrow

Marcus du Sautoy, The Creativity Code: How AI Is Learning to Write, Paint, and Think

Gary Smith, The AI Delusion